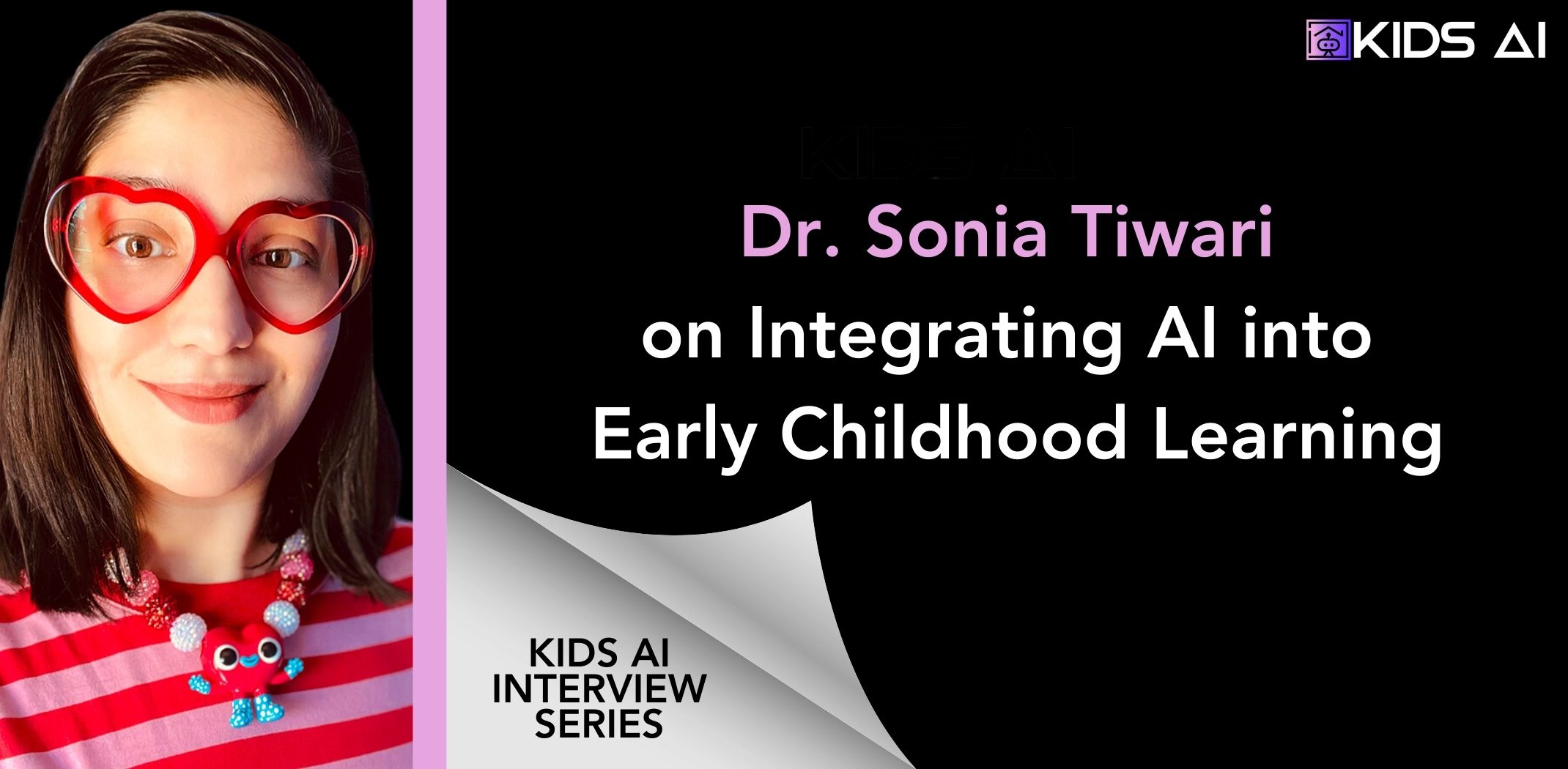

In this edition of the Kids AI interview series, Evren Yiğit interviews Dr. Sonia Tiwari, a pioneer in Learning Experience Design and Research who specializes in integrating AI into early childhood education. Dr. Tiwari's innovative work developing ethical AI tools and virtual influencers for children sets her apart in the field. Her commitment to creating engaging and safe educational products offers invaluable insights for anyone interested in the responsible application of AI in education.

Evren Yiğit: Sonia, your unique background in animation and education significantly shapes your approach to creating educational products. How has this combination influenced your methodologies and outcomes?

Dr. Sonia Tiwari: Learning Experience Design as a field has been the perfect converging point for my design and research skills. Both visual design and learning design involve creative storytelling. Animation uses characters, backgrounds, sounds, and narrative plot structures to tell stories. Education uses learning theories, educational research, learning resources, learner feedback, and so on to tell a story. Ultimately, it's about striking a balance: the key is a product or service that is visually stunning AND educationally sound, rather than the typical great educational idea with poorly executed design, or a product with plenty of visual bells and whistles but no solid educational foundation.

Evren Yiğit: That's a good point. We believe children should be at the center of the design process, so that their voices are heard and their needs are met. Ethical considerations are also central to your design of virtual influencers and AI tools for children. How do you balance the need for engagement with ensuring safety, and what key ethical considerations guide your design process?

Dr. Sonia Tiwari: Assessing genuine NEED is the first ethic for me. Do I really need to use virtual influencers or AI tools in a particular setting? What is the context of the learner population, what challenges do they face, and what other solutions exist? To find these answers, I lean on the guidance provided by the Office of Educational Technology (OET) of the U.S. Department of Education: conducting literature reviews to see what's been done before, developing a logic model or rubric, pilot testing, making observations in classrooms, homes, museums, or other settings where kids interact with AI, running pre-tests and post-tests with AI tools, and designing experimental studies such as randomized controlled trials to truly understand whether there's a significant difference between intervention and control groups. Once a need is established, I delve into other ethics such as protecting personal data, using developmentally appropriate language and content, being transparent about how the tools are made and used, and making sure the LLM itself has support systems for wellbeing, inclusion, fairness, and accessibility.

Evren Yiğit: At Kids AI, we strongly advocate for ‘designing with, not for’ children, ensuring their voices and needs are central to the development of children’s products. Similarly, the concept of ‘co-creation’ is pivotal in your work. Could you explain how this approach enhances educational outcomes for children?

Dr. Sonia Tiwari: Co-design is essential at multiple stages of designing any GenAI product or service for kids. Co-design at the product or service design level is crucial because, as adults, we can't rely on our own lived experience to guess what children would like, so it makes sense to test things with the actual audience. Co-design at the usage level means that GenAI leaves room for children to build something collaboratively, instead of passively demanding something from technology. A great example of this collaboration is the Brickit app, which uses AI to scan available LEGO bricks and suggests structures children could build with them. In testing this app with kids, I found that almost no one followed the generated designs as-is; instead, they used them as a general direction or inspiration. Another example is Animated Drawings by Meta Demo Lab, where kids can scan their hand-drawn characters with a phone or tablet, and the AI adds a generated rig and animation to bring these drawings to life. That's the beauty of co-design: there's still room for human ingenuity and creativity to thrive!

Evren Yiğit: That’s fascinating! During one of your recent workshops, you explored co-creation with children using GenAI at Harvard GSE. What were some of the most surprising or innovative outcomes from these sessions?

Dr. Sonia Tiwari: The LDIT students at Harvard GSE were already familiar with several AI tools, such as custom GPTs, and with writing thoughtful prompts for text-to-text and text-to-image generative AI. From the workshop, they picked up some new ideas and modalities of generative output, such as text-to-speech, text-to-video, image-to-video, and video-to-video. Some students built a storytelling GPT that works from any theme the user enters. Some explored combining a tangible toy with digital generative content to create a hybrid, or "phygital", AI experience for kids. Some international students considered how they might use bilingual content and cultural context to tailor GenAI learning experiences to a specific audience, and others explored how AI's ability to see, hear, and talk in real time could support kids building complex projects in community makerspaces. In the workshop, we focused only on conceptual mockups and initial prototypes. I hope that if any ideas stood out to the students, they'll continue to develop them!

Evren Yiğit: These sound like very innovative ideas. You have provided LX design consultations to a range of EdTech startups and educational innovators. What common challenges do these organizations face when incorporating AI into their products, and how do you help them overcome these hurdles?

Dr. Sonia Tiwari: I think the biggest challenge, particularly in Silicon Valley, is that trend-chasing often overtakes educational goals. GenAI is amazing and has immense potential, but that doesn't mean anyone with GenAI development skills can add a feature to a foundationally weak educational app and call it "innovative". We need designers to brainstorm in collaboration with children, educators, researchers, and caregivers to identify the challenges that need to be addressed, and then determine whether AI is a potential solution. We also need to figure out where human intervention is essential and where it's OK to outsource something to AI, holding some space for human ingenuity and creativity. To a hammer, everything is a nail. And right now, many edtech startups are one step away from becoming a "GenAI company", when GenAI is just an advanced feature, not the core concept, in any industry, not just education.

When consulting with these startups, I always go back to the question of WHY: why certain GenAI features are needed, whether they are genuinely helping, whether something simpler would solve the problem, and so on. Sometimes these questions can be answered after a small focus group or play-testing session; sometimes the product or service idea is so abstract that it takes a few iterations just to get it to a stage where it can be tested. I mostly help bring clarity to what unique problem needs solving for kids, and whether the proposed business endeavor is the best way to solve it. From there, we set some goals and design a product roadmap.

Evren Yiğit: Thank you for sharing that. You have an extensive and very impressive portfolio of educational toys and AI-enhanced activities. Could you share some examples of projects that exemplify your approach to using AI to enhance children's educational experiences? Our readers would love to hear about them.

Dr. Sonia Tiwari: Thank you! One of my favorite examples is the 'Craft with Oki Pie' AI tool I designed for children's community makerspaces. Oki Pie is a fictional heart-shaped character who loves making things for and with her family and friends. The children at the makerspaces I work with are familiar with this character through short stories and illustrations I've shared with them in the past.

The AI tool acts as an interactive extension of the stories, and a way for children to brainstorm project ideas with Oki. In community makerspaces, we have access to donated craft supplies such as cardboard boxes, plastic bottles, unused or leftover nail paints, and pieces of fabric. Before designing the AI tool, I noticed that makerspace facilitators were struggling to come up with activity ideas based on random materials, especially when kids of a wide age range and with diverse interests stop by and expect to make something personally meaningful. The AI tool reverse-engineers project ideas from the available materials. Now, facilitators can enter the child's grade level, interests, materials of choice, and the number of project ideas they want, and the AI tool (built on Playlab.ai) gives them project ideas broken down into simple steps. Many community makerspaces across the US are now trying it out, and I always love hearing about how it's being used. My next goal is to do more formal research on this tool.

Evren Yiğit: That’s truly inspiring. What do you consider the most exciting or promising trends in AI applications for children’s education in the coming years? How should educators and developers prepare to adapt to these innovations?

Dr. Sonia Tiwari: I think educators and developers need to be cautiously optimistic about AI. We need to innovate without letting our guard down, putting safeguards in place from the conceptual phase of any AI application. Whether we like it or not, AI is here, and once we radically accept this change, we can move on to objectively designing, testing, and iterating on AI applications until we find the best and safest learning experience for children. As a kid, I lived through the transition from a pre-internet to a post-internet world, which felt like an equally massive change in the way humanity functions. If you look through academic journal archives from the early 1990s, you'll see that researchers ran a publication mill on whether the internet was making kids dumber or smarter. A similar publication mill is running now, turning basic prompt engineering strategies into a series of special issue publications. I'd recommend that we rise above pseudo-intellectualism around AI (being able to use LLM lingo and create flashy tools) and refocus on the WHY. Why are we using AI in children's lives, and what will be the role of human educators, caregivers, researchers, and designers in this journey?

Evren Yiğit: At Kids AI, we are deeply committed to the responsible, ethical, transparent, and beneficial use of AI in children’s media and education. What do you believe are the most important considerations for companies to keep in mind to ensure they adhere to these principles when developing AI-enabled educational tools?

Dr. Sonia Tiwari: Anyone in a leadership position at a children's AI company must realize that this is a vulnerable population without the power to fend for itself. That alone should raise the bar for being responsible. Take time to read the work of, and consult with, thought leaders in this space. Collaborate with children, caregivers, researchers, and educators. Be mindful about the data you collect, be transparent about your process, and be willing to change when you sense any discomfort in the community. Laws around GenAI are still developing, at different paces and with different levels of rigidity around the world. We can't wait around for laws to offer concrete ethical guidance, so consult with ethicists and industry experts from an early stage.

Kids AI Closing Insights

The insights shared by Dr. Sonia Tiwari in this interview highlight the profound impact that ethical and responsible AI can have on children’s education. Her emphasis on co-creation and designing with, not for, children resonates deeply with Kids AI’s mission to place children’s voices at the center of AI development. The examples of AI-enhanced activities and virtual influencers she provides demonstrate the incredible potential for personalized and inclusive learning experiences. At Kids AI, we are committed to ensuring that these technologies are not only innovative but also safe, transparent, and beneficial. Dr. Tiwari’s work exemplifies these principles, demonstrating how AI can be a powerful tool for fostering creativity, inclusivity, and ethical engagement in educational settings. Her perspective on ‘being cautiously optimistic’ about AI beautifully encapsulates the balanced approach we strive to promote at Kids AI.